SuperCollider as a Reactive Performer

Senior Undergraduate Thesis, Received Honors

Project Description

This project uses SuperCollider, a real-time audio synthesis engine written in C++, to create an artificially intelligent musical collaborator. A Q-Learning system uses reinforcement learning to generate more intense or less intense versions of live-coded beat patterns supplied as input. It also connects these generated beats to an acoustic instrumentalist's playing by tracking the intensity of the instrumental input and moving through a "score" of beat patterns that match that intensity.
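To make the reinforcement learning idea concrete, here is a minimal sketch of tabular Q-learning in Python. It is not the thesis code, which runs in SuperCollider. It assumes a hypothetical setup in which a pattern has one of five discrete intensity levels, the agent can lower, keep, or raise that level, and the reward is higher the closer the result is to a target intensity. The names `ACTIONS`, `N_LEVELS`, `step`, `train`, and `best_action`, along with all parameter values, are illustrative assumptions.

```python
import random

ACTIONS = (-1, 0, 1)  # hypothetical: lower, keep, or raise pattern intensity
N_LEVELS = 5          # hypothetical number of discrete intensity levels


def step(level, action):
    """Apply an action and clamp the result to the valid intensity range."""
    return max(0, min(N_LEVELS - 1, level + action))


def train(target, episodes=500, alpha=0.5, gamma=0.9, epsilon=0.2, seed=0):
    """Tabular Q-learning: learn which action moves a pattern toward `target`."""
    rng = random.Random(seed)
    q = [[0.0] * len(ACTIONS) for _ in range(N_LEVELS)]
    for _ in range(episodes):
        level = rng.randrange(N_LEVELS)  # start each episode at a random level
        for _ in range(10):
            # Epsilon-greedy selection: explore sometimes, otherwise exploit.
            if rng.random() < epsilon:
                a = rng.randrange(len(ACTIONS))
            else:
                a = max(range(len(ACTIONS)), key=lambda i: q[level][i])
            nxt = step(level, ACTIONS[a])
            reward = -abs(target - nxt)  # assumed reward: closer is better
            # Q-learning update toward reward plus discounted best next value.
            q[level][a] += alpha * (reward + gamma * max(q[nxt]) - q[level][a])
            level = nxt
    return q


def best_action(q, level):
    """Return the greedy action (as an intensity change) for a given level."""
    return ACTIONS[max(range(len(ACTIONS)), key=lambda i: q[level][i])]
```

After training with a high target, `best_action(q, 0)` should favor raising intensity. In a performance setting, the target could plausibly come from the tracked intensity of the instrumentalist, though how the thesis system defines states and rewards is not specified here.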

NERD Summit (UMass)

This project evolved into a collaboration with Sarika Doppalapudi. Sarika created two homemade toys made out of recycled plastic, which were used as electronic music controllers to control the system Emma built. They gave a presentation on this work at UMass as part of the NERD Summit 2023. Their talk can be seen below.

Harvestworks Performance

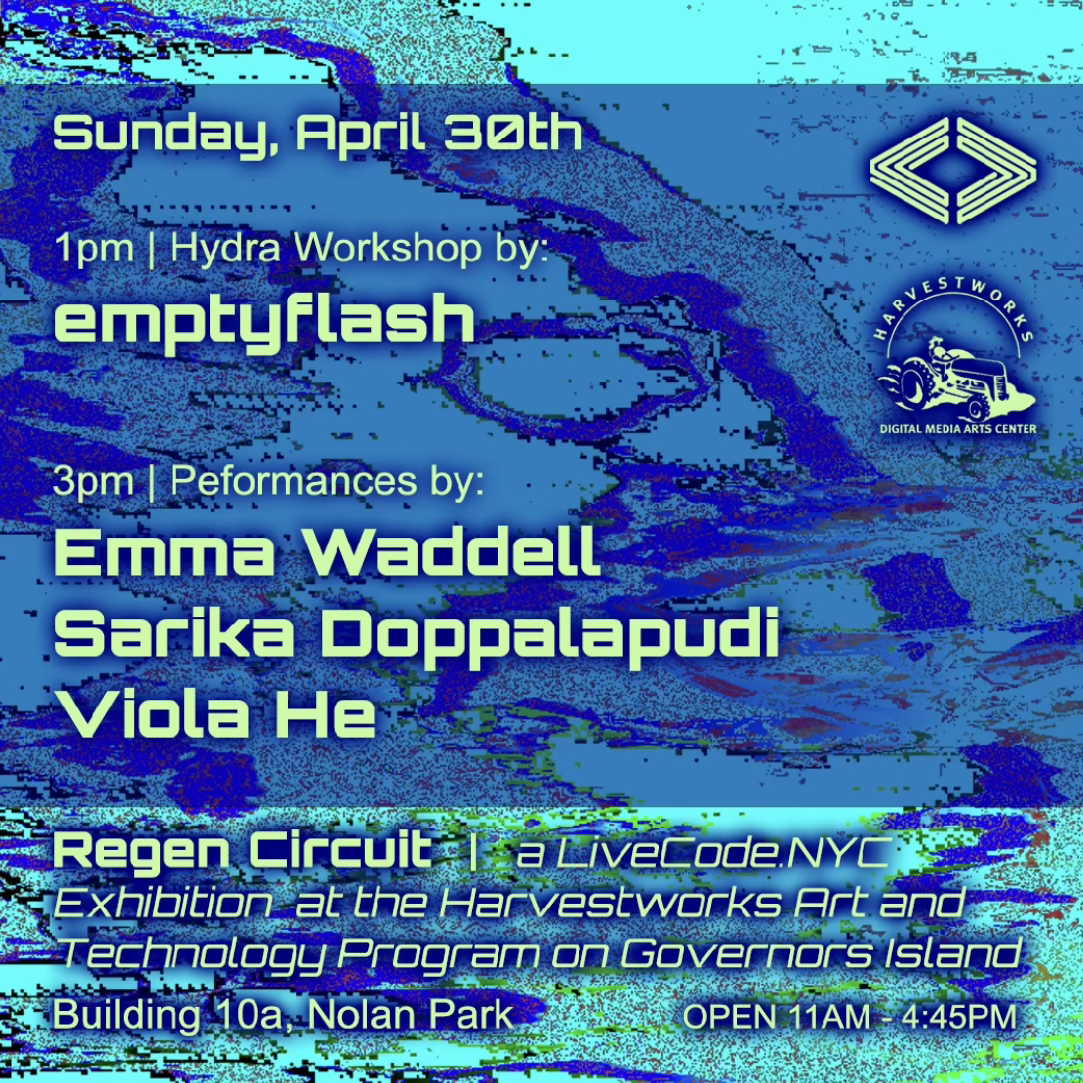

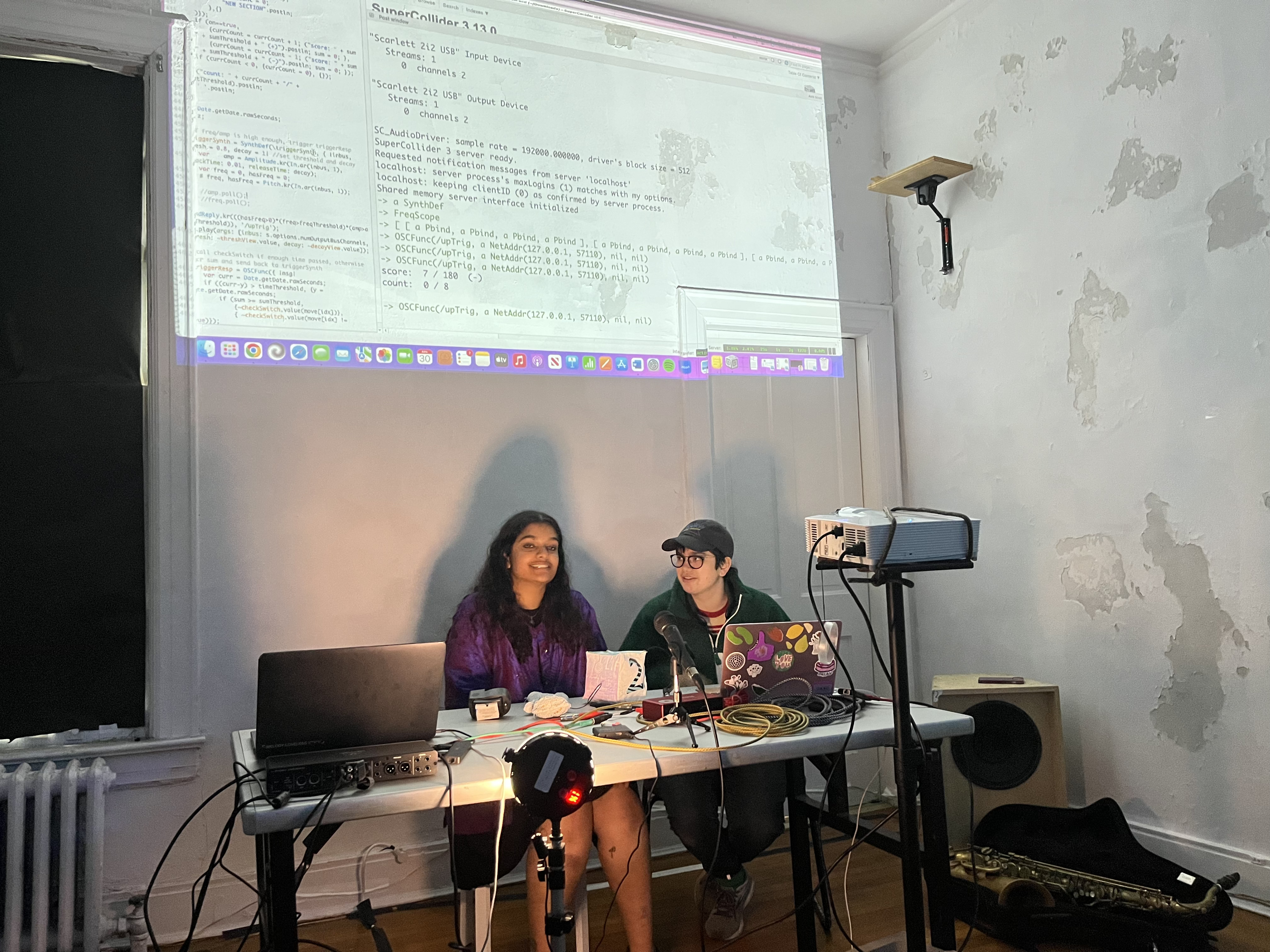

Sarika and Emma also performed at Harvestworks through a residency with LiveCode NYC, showcasing this system and the plastic toy instruments with saxophone input and audience interaction. Pictured are the poster for the event and a photo of their performance with the program projected behind them.

Technologies Used

- SuperCollider

- C++

- Q-Learning Reinforcement Learning Algorithm